Comments:"Single page apps in depth (a.k.a. Mixu' single page app book)"

URL:http://singlepageappbook.com/single-page.html

This free book is what I wanted when I started working with single page apps. It's not an API reference on a particular framework; rather, the focus is on discussing patterns, implementation choices and decent practices.

I'm taking a "code and concepts" approach to the topic - the best way to learn how to use something is to understand how it is implemented. My ambition here is to decompose the problem of writing a web app, take a fresh look at it and hopefully make better decisions the next time you make one.

Update: the book is now also on Github. I'll be doing a second set of updates to the book later on. Right now, I'm working on a new lightweight open source view layer implementation, which has changed and clarified my thinking about the view layer.

Introduction

Writing maintainable code

Implementation alternatives: a look at the options

Meditations on Models & Collections

Views - templating, behavior and event consumption

Why do we want to write single page apps? The main reason is that they allow us to offer a more-native-app-like experience to the user.

This is hard to do with other approaches. Supporting rich interactions with multiple components on a page means that those components have many more intermediate states (e.g. menu open, menu item X selected, menu item Y selected, menu item clicked). Server-side rendering is hard to implement for all the intermediate states - small view states do not map well to URLs.

Single page apps are distinguished by their ability to redraw any part of the UI without requiring a server roundtrip to retrieve HTML. This is achieved by separating the data from the presentation of data by having a model layer that handles data and a view layer that reads from the models.

Most projects start with high ambitions, and an imperfect understanding of the problem at hand. Our implementations tend to outpace our understanding. It is possible to write code without understanding the problem fully; that code is just more complex than it needs to be because of our lack of understanding.

Good code comes from solving the same problem multiple times, or refactoring. Usually, this proceeds by noticing recurring patterns and replacing them with a mechanism that does the same thing in a consistent way - replacing a lot of "case-specific" code, which in fact was just there because we didn't see that a simpler mechanism could achieve the same thing.

The architectures used in single page apps represent the result of this process: where you would do things in an ad-hoc way using jQuery, you now write code that takes advantage of standard mechanisms (e.g. for UI updates etc.).

Programmers are obsessed with ease rather than simplicity (thank you Rich Hickey for making this point); or, what the experience of programming is instead of what the resulting program is like. This leads to useless conversations about semicolons and whether we need a preprocessor that eliminates curly braces. We still talk about programming as if typing in the code was the hard part. It's not - the hard part is maintaining the code.

To write maintainable code, we need to keep things simple. This is a constant struggle; it is easy to add complexity (intertwinedness/dependencies) in order to solve a worthless problem; and it is easy to solve a problem in a way that doesn't reduce complexity. Namespaces are an example of the latter.

With that in mind, let's look at how a modern web app is structured from three different perspectives:

- Architecture: what (conceptual) parts does our app consist of? How do the different parts communicate with each other? How do they depend on each other?

- Asset packaging: how is our app structured into files and files into logical modules? How are these modules built and loaded into the browser? How can the modules be loaded for unit testing?

- Run-time state: when loaded into the browser, what parts of the app are in memory? How do we perform transitions between states and gain visibility into the current state for troubleshooting?

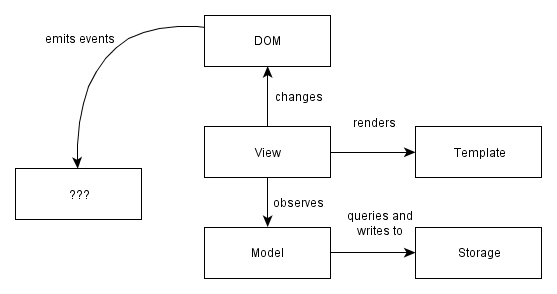

A modern web application architecture

Modern single page apps are generally structured as follows:

More specifically:

Write-only DOM. No state / data is read from the DOM. The application outputs HTML and operations on elements, but nothing is ever read from the DOM. Storing state in the DOM gets hard to manage very quickly: it is much better to have one place where the data lives and to render the UI from the data, particularly when the same data has to be shown in multiple places in the UI.

Models as the single source of truth. Instead of storing data in the DOM or in random objects, there is a set of in-memory models which represent all of the state/data in the application.

Views observe model changes. We want the views to reflect the content of the models. When multiple views depend on a single model (e.g. when a model changes, redraw these views), we don't want to manually keep track of each dependent view. Instead of manually tracking things, there is a change event system through which views receive change notifications from models and handle redrawing themselves.

Decoupled modules that expose small external surfaces. Instead of making things global, we should try to create small subsystems that are not interdependent. Dependencies make code hard to set up for testing. Small external surfaces make refactoring internals easy, since most things can change as long as the external interface remains the same.

Minimizing DOM-dependent code. Why? Any code that depends on the DOM needs to be tested for cross-browser compatibility. By writing code in a way that isolates those nasty parts, a much more limited surface area needs to be tested for cross-browser compatibility. Cross-browser incompatibilities are a lot more manageable this way. Incompatibilities are in the DOM implementations, not in the Javascript implementations, so it makes sense to minimize and isolate DOM-dependent code.
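The "views observe model changes" idea can be sketched in a few lines. This is only an illustration, not the API of any particular framework: the names Model, on, get, set and TitleView are hypothetical, and the view writes to a plain string property instead of the DOM so that the example runs anywhere.

```javascript
// Hypothetical minimal model: holds attributes and notifies listeners on change.
function Model(attrs) {
  this.attrs = attrs || {};
  this.listeners = [];
}

// Register a callback that is invoked whenever an attribute changes.
Model.prototype.on = function(callback) {
  this.listeners.push(callback);
};

Model.prototype.get = function(key) {
  return this.attrs[key];
};

// Update a value and send a change notification to every listener.
Model.prototype.set = function(key, value) {
  if (this.attrs[key] === value) return;
  this.attrs[key] = value;
  this.listeners.forEach(function(callback) {
    callback(key, value);
  });
};

// Hypothetical view: renders from the model and redraws itself on change.
// It only writes output; it never reads state back from it.
function TitleView(model) {
  var self = this;
  this.model = model;
  this.html = '';
  model.on(function() { self.render(); });
  this.render();
}

TitleView.prototype.render = function() {
  this.html = '<h1>' + this.model.get('title') + '</h1>';
};

// Two views depend on one model; nobody tracks the dependent views by hand.
var todo = new Model({ title: 'Write book' });
var header = new TitleView(todo);
var sidebar = new TitleView(todo);
todo.set('title', 'Edit book');
```

After the set() call both views hold the new markup, because each one subscribed to the model when it was created.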

Controllers must die

There is a reason why I didn't use the word "Controller" in the diagram further above. I don't like that word, so you won't see it used much in this book. My reason is simple: it is just a placeholder that we've carried into the single page app world from having written too many "MVC" server-side apps.

Most current single page application frameworks still use the term "Controller", but I find that it has no meaning beyond "put glue code here". As seen in a presentation:

"Controllers deal with adding and responding to DOM events, rendering templates and keeping views and models in sync". WAT? Maybe we should look at those problems separately?

Single page apps need a better word, because they have more complex state transitions than a server-side app:

- there are DOM events that cause small state changes in views

- there are model events when model values are changed

- there are application state changes that cause views to be swapped

- there are global state changes, like going offline in a real time app

- there are delayed results from AJAX that get returned at some point from backend operations

These are all things that need to be glued together somehow, and the word "Controller" is sadly deficient in describing the coordinator for all these things.

We clearly need a model to hold data and a view to deal with UI changes, but the glue layer consists of several independent problems. Knowing that a framework has a controller tells you nothing about how it solves those problems, so I hope to encourage people to use more specific terms.

That's why this book doesn't have a chapter on controllers; however, I do tackle each of those problems as I go through the view layer and the model layer. The solutions used each have their own terms, such as event bindings, change events, initializers and so on.

Asset packaging (or more descriptively, packaging code for the browser)

Asset packaging is where you take your JS application code and create one or more files (packages) that can be loaded by the browser via script tags.

Nobody seems to emphasize how crucial it is to get this right! Asset packaging is not about speeding up your loading time - it is about making your application modular and making sure that it does not devolve into an untestable mess. Yet people think it is about performance and hence optional.

If there is one part that influences how testable and how refactorable your code is, it is how well you split your code into modules and enforce a modular structure. And that's what "asset packaging" is about: dividing things into modules and making sure that the run-time state does not devolve into a mess. Compare the approaches below:

Messy and random (no modules) vs. packages and modules (modular)

The default ("throw each JS file into the global namespace and hope that the result works") is terrible, because it makes unit testing - and by extension, refactoring - hard. This is because bad modularization leads to dependencies on global state and global names which make setting up tests hard.

In addition, implicit dependencies make it very hard to know which modules depend on whatever code you are refactoring; you basically rely on other people following good practice (don't depend on things I consider internal details) consistently. Explicit dependencies enforce a public interface, which means that refactoring becomes much less of a pain since others can only depend on what you expose. It also encourages thinking about the public interface more. The details of how to do this are in the chapters on maintainability and modularity.

Run-time state

The third way to look at a modern single page application is to look at its run-time state. Run time state refers to what the app looks like when it is running in your browser - things like what variables contain what information and what steps are involved in moving from one activity (e.g. page) to another.

There are three interesting relationships here:

URL < - > state

Single page applications have a schizophrenic relationship with URLs. On the one hand, single page apps exist so that the users can have richer interactions with the application. Richer activities mean that there is more view state than can reasonably fit inside a URL. On the other hand, we'd also like to be able to bookmark a URL and jump back to the same activity.

In order to support bookmarks, we probably need to reduce the level of detail that we support in URLs somewhat. If each page has one primary activity (which is represented in some level of detail in the URL), then each page can be restored from a bookmark to a sufficient degree. The secondary activities (like say, a chat within a webmail application) get reset to the default state on reload, since storing them in a bookmarkable URL is pointless.
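As a rough sketch of that trade-off, the snippet below maps a URL hash to the primary activity only and lets secondary state fall back to defaults. The hash layout ("#/activity/param/...") and the names parseState, toHash and chatOpen are assumptions made for this example.

```javascript
// Hypothetical: turn a hash such as "#/mail/inbox/42" into the primary activity state.
function parseState(hash) {
  var parts = hash.replace(/^#\/?/, '').split('/').filter(Boolean);
  return {
    activity: parts[0] || 'home',
    params: parts.slice(1)
  };
}

// The inverse: only the primary activity is written back into the URL.
function toHash(state) {
  return '#/' + [state.activity].concat(state.params).join('/');
}

var appState = parseState('#/mail/inbox/42');
// Secondary view state (e.g. a chat panel) is not in the URL,
// so it starts from its default on every load.
appState.chatOpen = false;
```

Because toHash() ignores chatOpen, a bookmark restores the mail activity but not the chat panel.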

Definition < - > initialization

Some people still mix these two together, which is a bad idea. Reusable components should be defined without actually being instantiated/activated, because that allows for reuse and for testing. But once we do that, how do we actually perform the initialization/instantiation of various app states?

I think there are three general approaches: one is to have a small function for each module that takes some inputs (e.g. IDs) and instantiates the appropriate views and objects. Another is to have a global bootstrap file followed by a router that loads the correct state from among the global states. The last one is to wrap everything in sugar that makes instantiation order invisible.

I like the first one; the second one is mostly seen in apps that have organically grown to a point where things start being entangled; the third one is seen in some frameworks, particularly with regards to the view layer.

The reason I like the first one is that I consider state (e.g. instances of objects and variables) to be disgusting and worth isolating in one file (per subsystem - state should be local, not global, but more on that later). Pure data is simple, so are definitions. It is when we have a lot of interdependent and/or hard-to-see state that things become complicated: hard to reason about and generally unpleasant.

The other benefit of the first approach is that it doesn't require loading the full application on each page reload. Since each activity is initializable on its own, you can test a single part of the app without loading the full app. Similarly, you have more flexibility in preloading the rest of the app after the initial view is active (vs. at the beginning); this also means that the initial loading time won't increase proportionately to the number of modules your app has.
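A minimal sketch of the first approach might look like the following. The names TodoList, TodoListView and initializeTodos, and the shape of the options argument, are hypothetical; the point is only that definitions create no state and a single small function does.

```javascript
// Definitions only: nothing is instantiated when this code is loaded.
function TodoList(items) {
  this.items = items;
}

function TodoListView(list) {
  this.list = list;
}

TodoListView.prototype.render = function() {
  return this.list.items.map(function(item) {
    return '<li>' + item + '</li>';
  }).join('');
};

// The one place where state is created for this activity.
// `options` is a hypothetical bag of inputs (e.g. IDs or preloaded data).
function initializeTodos(options) {
  var list = new TodoList(options.items || []);
  var view = new TodoListView(list);
  return { list: list, view: view };
}

// In the app, a router would call this; in a test, we can call it directly.
var activity = initializeTodos({ items: ['milk', 'eggs'] });
var output = activity.view.render();
```

Since initializeTodos() needs nothing beyond its arguments, this activity can be started, tested or preloaded without the rest of the application.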

HTML elements < - > view objects and HTML events < - > view changes

Finally, there is the question of how much visibility we can gain into the run time state of the framework we are using. I haven't seen frameworks address this explicitly (though of course there are tricks): when I am running my application, how can I tell what's going on by selecting a particular HTML element? And when I look at a particular HTML element, how can I tell what will happen when I click it or perform some other action?

Simpler implementations generally fare better, since the distance from an HTML element/event to your view object / event handler is much shorter. I am hoping that frameworks will pay more attention to surfacing this information.

This is just the beginning

So, here we have it: three perspectives - one from the point of view of the architect, one from the view of the filesystem, and finally one from the perspective of the browser.

Modularity is at the core of everything. Initially I had approached this very differently, but it turned out after ~ 20 drafts that nothing else is as important as getting modularization right.

Good modularization makes building and packaging for the browser easy, it makes testing easier and it defines how maintainable the code is. It is the linchpin that makes it possible to write testable, packagable and maintainable code.

What is maintainable code?

- it is easy to understand and troubleshoot

- it is easy to test

- it is easy to refactor

What is hard-to-maintain code?

- it has many dependencies, making it hard to understand and hard to test independently of the whole

- it accesses data from and writes data to the global scope, which makes it hard to consistently set up the same state for testing

- it has side-effects, which means that it cannot be instantiated easily/repeatably in a test

- it exposes a large external surface and doesn't hide its implementation details, which makes it hard to refactor without breaking many other components that depend on that public interface

If you think about it, these statements are either directly about modularizing code properly, or are influenced by the way in which code is divided into distinct modules.

What is modular code?

Modular code is code which is separated into independent modules. The idea is that internal details of individual modules should be hidden behind a public interface, making each module easier to understand, test and refactor independently of others.

Modularity is not just about code organization. You can have code that looks modular, but isn't. You can arrange your code in multiple modules and have namespaces, but that code can still expose its private details and have complex interdependencies through expectations about other parts of the code.

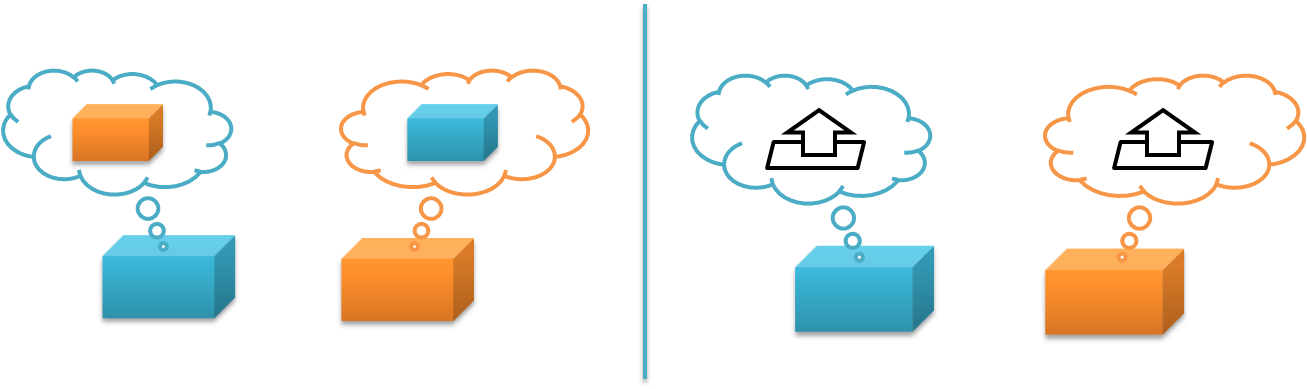

Compare the two cases above (1). In the case on the left, the blue module knows specifically about the orange module. It might refer to the other module directly via a global name; it might use the internal functions of the other module that are carelessly exposed. In any case, if that specific module is not there, it will break.

In the case on the right, each module just knows about a public interface and nothing else about the other module. The blue module can use any other module that implements the same interface; more importantly, as long as the public interface remains consistent the orange module can change internally and can be replaced with a different implementation, such as a mock object for testing.
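The case on the right can be illustrated with a small sketch. The names ProfileWidget, describe and mockStore are hypothetical, and the interface (an object with a fetch(id, callback) method) is an assumption chosen for the example.

```javascript
// The "blue" module: it only knows that `store` has a fetch(id, callback) method.
function ProfileWidget(store) {
  this.store = store;
}

ProfileWidget.prototype.describe = function(id, done) {
  this.store.fetch(id, function(err, user) {
    if (err) return done(err);
    done(null, user.name + ' <' + user.email + '>');
  });
};

// A mock "orange" module for tests: same interface, no network involved.
var mockStore = {
  fetch: function(id, callback) {
    callback(null, { name: 'Test User', email: 'test@example.com' });
  }
};

var described;
new ProfileWidget(mockStore).describe(1, function(err, text) {
  described = text;
});
```

The widget never refers to a concrete store by global name, so a real implementation and the mock are interchangeable as long as both provide fetch().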

The problem with namespaces

The browser does not have a module system other than that it is capable of loading files containing Javascript. Everything in the root scope of those files is injected directly into the global scope under the window variable in the same order the script tags were specified.

When people talk about "modular Javascript", what they often refer to is using namespaces. This is basically the approach where you pick a prefix like "window.MyApp" and assign everything underneath it, with the idea that when every object has its own global name, we have achieved modularity. Namespaces do create hierarchies, but they suffer from two problems:

Choices about privacy have to be made on a global basis. In a namespace-only system, you can have private variables and functions, but choices about privacy have to be made on a global basis within a single source file. Either you expose something in the global namespace, or you don't.

This does not provide enough control; with namespaces you cannot expose some detail to "related"/"friendly" users (e.g. within the same subsystem) without making that code globally accessible via the namespace.

This leads to coupling through the globally accessible names. If you expose a detail, you have no control over whether some other piece of code can access and start depending on something you meant to make visible only to a limited subsystem.

We should be able to expose details to related code without exposing that code globally. Hiding details from unrelated modules is useful because it makes it possible to modify the implementation details without breaking dependent code.

Modules become dependent on global state. The other problem with namespaces is that they do not provide any protection from global state. Global namespaces tend to lead to sloppy thinking: since you only have blunt control over visibility, it's easy to fall into the mode where you just add or modify things in the global scope (or a namespace under it).

One of the major causes of complexity is writing code that has remote inputs (e.g. things referred to by global name that are defined and set up elsewhere) or global effects (e.g. where the order in which a module was included affects other modules because it alters global variables). Code written using globals can have a different result depending on what is in the global scope (e.g. window.*).

Modules shouldn't add things to the global scope. Locally scoped data is easier to understand, change and test than globally scoped data. If things need to be put in the global scope, that code should be isolated and become a part of an initialization step. Namespaces don't provide a way to do this; in fact, they actively encourage you to change the global state and inject things into it whenever you want.
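To make the difference between remote and local inputs concrete, here is a small sketch. The function names and the currentUser global are hypothetical, and globalThis stands in for window so that the snippet also runs outside a browser.

```javascript
// Remote input: the result depends on a global that some other code must set up first.
function greetFromGlobal() {
  return 'Hello, ' + globalThis.currentUser.name;
}

// Local input: everything the function needs is passed in explicitly.
function greet(user) {
  return 'Hello, ' + user.name;
}

// The explicit version can be exercised with any value, regardless of load order.
var greeting = greet({ name: 'Ada' });
```

Calling greetFromGlobal() before something has assigned currentUser throws, which is exactly the kind of order dependency described above.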

Examples of bad practices

The examples below illustrate some bad practices.

Do not leak global variables

Avoid adding variables to the global scope if you don't need to. The snippet below will implicitly add a global variable.

```javascript
// Bad: adds a global variable called "window.foo"
var foo = 'bar';
```

To prevent variables from becoming global, always write your code in a closure/anonymous function - or have a build system that does this for you:

```javascript
;(function() {
  // Good: local variable is inaccessible from the global scope
  var foo = 'bar';
}());
```

If you actually want to register a global variable, then you should make it a big thing and only do it in one specific place in your code. This isolates instantiation from definition, and forces you to look at your ugly state initialization instead of hiding it in multiple places (where it can have surprising impacts):

```javascript
function initialize(win) {
  // Good: if you must have globals,
  // make sure you separate definition from instantiation
  win.foo = 'bar';
}
```

In the function above, the variable is explicitly assigned to the win object passed to it. The reason this is a function is that modules should not have side-effects when loaded. We can defer calling the initialize function until we really want to inject things into the global scope.

Do not expose implementation details

Details that are not relevant to the users of the module should be hidden. Don't just blindly assign everything into a namespace. Otherwise anyone refactoring your code will have to treat the full set of functions as the public interface until proven differently (the "change and pray" method of refactoring).

Don't define two things (or, oh, horror, more than two things!) in the same file, no matter how convenient it is for you right now. Each file should define and export just one thing.

```javascript
;(function() {
  // Bad: global names = global state
  window.FooMachine = {};

  // Bad: implementation detail is made publicly accessible
  FooMachine.processBar = function () { /* ... */ };

  FooMachine.doFoo = function(bar) {
    FooMachine.processBar(bar);
    // ...
  };

  // Bad: exporting another object from the same file!
  // No logical mapping from modules to files.
  window.BarMachine = { /* ... */ };
})();
```

The code below does it properly: the internal "processBar" function is local to the scope, so it cannot be accessed outside. It also only exports one thing, the current module.

```javascript
;(function() {
  // Good: the name is local to this module
  var FooMachine = {};

  // Good: implementation detail is clearly local to the closure
  function processBar() { /* ... */ }

  FooMachine.doFoo = function(bar) {
    processBar(bar);
    // ...
  };

  // Good: only exporting the public interface,
  // internals can be refactored without worrying
  return FooMachine;
})();
```

A common pattern for classes (e.g. objects instantiated from a prototype) is to simply mark class methods as private by starting them with an underscore. You can properly hide class methods by using .call/.apply to set "this", but I won't show it here; it's a minor detail.
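For readers who want to see the .call technique anyway, a minimal sketch could look like this. Counter, increment and tick are hypothetical names chosen for the example.

```javascript
var Counter = (function() {
  // Private: a plain function in the closure, not on the prototype,
  // so code outside this closure cannot reach it.
  function increment(step) {
    this.count += step;
  }

  function Counter() {
    this.count = 0;
  }

  // Public method: invokes the private function with "this" set explicitly.
  Counter.prototype.tick = function() {
    increment.call(this, 1);
    return this.count;
  };

  return Counter;
}());

var counter = new Counter();
counter.tick();
counter.tick();
```

Instances expose tick() but have no increment property, so the helper can be renamed or removed without affecting callers.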

Do not mix definition and instantiation/initialization

Your code should differentiate between definition and instantiation/initialization. Combining these two together often leads to problems for testing and reusing components.

Don't do this:

```javascript
function FooObserver() {
  // ...
}

var f = new FooObserver();
f.observe('window.Foo.Bar');

module.exports = FooObserver;
```

While this is a proper module (I'm excluding the wrapper here), it mixes initialization with definition. What you should do instead is have two parts, one responsible for definition, and the other performing the initialization for this particular use case. E.g. foo_observer.js:

```javascript
function FooObserver() {
  // ...
}

module.exports = FooObserver;
```

and bootstrap.js:

```javascript
var FooObserver = require('./foo_observer.js');

module.exports = {
  initialize: function(win) {
    // Baz is a placeholder for whatever object should be observed
    win.Foo.Bar = new Baz();

    var f = new FooObserver();
    f.observe('window.Foo.Bar');
  }
};
```

Now, FooObserver can be instantiated/initialized separately since we are not forced to initialize it immediately. Even if the only production use case for FooObserver is that it is attached to window.Foo.Bar, this is still useful because setting up tests can be done with different configuration.
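As an illustration of testing with a different configuration, the sketch below gives FooObserver a body so that it can run. Everything inside it is assumed: here observe() takes an object with an onChange hook rather than a name string, and the seen array exists only so the test can inspect what happened.

```javascript
// Hypothetical FooObserver definition: defining it has no side effects.
function FooObserver() {
  this.seen = [];
}

// Assumed signature: observe(target) hooks into any object via an onChange callback.
FooObserver.prototype.observe = function(target) {
  var self = this;
  target.onChange = function(value) {
    self.seen.push(value);
  };
};

// Test setup: a fake target stands in for window.Foo.Bar.
var fakeBar = {};
var observer = new FooObserver();
observer.observe(fakeBar);
fakeBar.onChange('hello');
```

Nothing here touches window, which is only possible because the definition does not initialize itself.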

Do not modify objects you don't own

While the other examples are about preventing other code from causing problems with your code, this one is about preventing your code from causing problems for other code.

Many frameworks offer a reopen function that allows you to modify the definition of a previously defined object prototype (e.g. class). Don't do this in your modules, unless the same code defined that object (and then, you should just put it in the definition).

If you think class inheritance is a solution to your problem, think harder. In most cases, you can find a better solution by preferring composition over inheritance: expose an interface that someone can use, or emit events that can have custom handlers rather than forcing people to extend a type. There are limited cases where inheritance is useful, but those are mostly limited to frameworks.

```javascript
;(function() {
  // Bad: redefining the behavior of another module
  window.Bar.reopen({
    // e.g. changing an implementation on the fly
  });

  // Bad: modifying a builtin type
  String.prototype.dasherize = function() {
    // While you can use the right API to hide this function,
    // you are still monkey-patching the language in an unexpected way
  };
})();
```

If you write a framework, for f*ck's sake do not modify built-in objects like String by adding new functions to them. Yes, you can save a few characters (e.g. _(str).dasherize() vs. str.dasherize()), but this is basically the same thing as making your special snowflake framework a global dependency. Play nice with everyone else and be respectful: put those special functions in a utility library instead.
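A utility-library version might look like the sketch below. The conversion rule (camelCase boundaries, whitespace and underscores become dashes) is an assumption for the example, as is the utils object that collects the helpers.

```javascript
// A standalone utility function instead of String.prototype.dasherize.
// Assumed rule: camelCase boundaries, whitespace and underscores become dashes.
function dasherize(str) {
  return str
    .replace(/([a-z\d])([A-Z])/g, '$1-$2')
    .replace(/[\s_]+/g, '-')
    .toLowerCase();
}

// Collect helpers in a plain object that callers import explicitly.
var utils = { dasherize: dasherize };

var dashed = utils.dasherize('fooBar baz_qux');
```

The built-in String prototype is left untouched, so other code on the page sees exactly the language it expects.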

Building modules and packages using CommonJS

Now that we've covered a few common bad practices, let's look at the positive side: how can we implement modules and packages for our single page application?

We want to solve three problems:

- Privacy: we want more granular privacy than just global or local to the current closure.

- Avoid putting things in the global namespace just so they can be accessed.

- We should be able to create packages that encompass multiple files and directories and be able to wrap full subsystems into a single closure.

CommonJS modules. CommonJS is the module format that Node.js uses natively. A CommonJS module is simply a piece of JS code that does two things:

- it uses require() statements to include dependencies

- it assigns to the exports variable to export a single public interface

Here is a simple example foo.js:

```javascript
var Model = require('./lib/model.js'); // require a dependency

// module implementation
function Foo(){ /* ... */ }

module.exports = Foo; // export a single variable
```

What about that var Model statement there? Isn't that in the global scope? No, there is no global scope here. Each module has its own scope. This is like having each module implicitly wrapped in an anonymous function (which means that variables defined are local to the module).

OK, what about requiring jQuery or some other library? There are basically two ways to require a file: either by specifying a file path (like ./lib/model.js) or by requiring it by name: var $ = require('jquery');. Items required by file path are located directly by their name in the file system. Things required by name are "packages" and are searched by the require mechanism. In the case of Node, it uses a simple directory search; in the browser, well, we can define bindings as you will see later.

What are the benefits?

Isn't this the same thing as just wrapping everything in a closure, which you might already be doing? No, not by a long shot.

It does not accidentally modify global state, and it only exports one thing. Each CommonJS module executes in its own execution context. Variables are local to the module, not global. You can only export one object per module.

Dependencies are easy to locate, without being modifiable or accessible in the global scope. Ever been confused about where a particular function comes from, or what the dependencies of a particular piece of code are? Not anymore: dependencies have to be explicitly declared, and locating a piece of code just means looking at the file path in the require statement. There are no implied global variables.

But isn't declaring dependencies redundant and not DRY? Yes, it's not as easy as using global variables implicitly by referring to variables defined under window. But the easiest way isn't always the best choice architecturally; typing is easy, maintenance is hard.

The module does not give itself a name. Each module is anonymous. A module exports a class or a set of functions, but it does not specify what the export should be called. This means that whoever uses the module can give it a local name and does not need to depend on it existing in a particular namespace.

You know those maddening version conflicts that occur when the semantics of include()ing a module modifies the environment to include the module using its inherent name? So you can't have two modules with the same name in different parts of your system because each name may exist only once in the environment? CommonJS doesn't suffer from those, because require() just returns the module and you give it a local name by assigning it to a variable.

It comes with a distribution system. CommonJS modules can be distributed using Node's npm package manager. I'll talk about this more in the next chapter.

There are thousands of compatible modules. Well, I exaggerate, but all modules in npm are CommonJS-based; and while not all of those are meant for the browser, there is a lot of good stuff out there.

Last, but not least: CommonJS modules can be nested to create packages. The semantics of require() may be simple, but it provides the ability to create packages which can expose implementation details internally (across files) while still hiding them from the outside world. This makes hiding implementation details easy, because you can share things locally without exposing them globally.

Creating a CommonJS package

Let's look at how we can create a package from modules following the CommonJS pattern. Creating a package starts with the build system. Let's just assume that we have a build system, which can take any set of .js files we specify and combine them into a single file.

```
[ [./model/todo.js] [./view/todo_list.js] [./index.js] ]
[ Build process ]
[ todo_package.js ]
```

The build process wraps all the files in closures with metadata, concatenates the output into a single file and adds a package-local require() implementation with the semantics described earlier (including files within the package by path and external libraries by their name).

Basically, we are taking a wrapping closure generated by the build system and extending it across all the modules in the package. This makes it possible to use require() inside the package to access other modules, while preventing external code from accessing those packages.

Here is what this would look like as code:

```javascript
;(function() {
  function require() { /* ... */ }

  var modules = { 'jquery': window.jQuery };

  modules['./model/todo.js'] = function(module, exports, require){
    var Dependency = require('dependency');
    // ...
    module.exports = Todo;
  };

  modules['index.js'] = function(module, exports, require){
    module.exports = {
      Todo: require('./model/todo.js')
    };
  };

  window.Todo = require('index.js');
}());
```

There is a local require() that can look up files. Each module exports an external interface following the CommonJS pattern. Finally, the package we have built here itself has a single file index.js that defines what is exported from the module. This is usually a public API, or a subset of the classes in the module (things that are part of the public interface).

Each package exports a single named variable, for example: window.Todo = require('index.js');. This way, only relevant parts of the module are exposed and the exposed parts are obvious. Other packages/code cannot access the modules in another package in any way unless they are exported from index.js. This prevents modules from developing hidden dependencies.

Building an application out of packages

The overall directory structure might look something like this:

```
assets
  - css
  - layouts
common
  - collections
  - models
  index.js
modules
  - todo
    - public
    - templates
    - views
    index.js
node_modules
package.json
server.js
```

Here, we have a place for shared assets (./assets/); there is a shared library containing reusable parts, such as collections and models (./common).

The ./modules/ directory contains subdirectories, each of which represents an individually initializable part of the application. Each subdirectory is its own package, which can be loaded independently of others (as long as the common libraries are loaded).

The index.js file in each package exports an initialize() function that allows that particular package to be initialized when it is activated, given parameters such as the current URL and app configuration.
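A package entry point along these lines might look roughly like the sketch below. The TodoListView class and the option names (url, config.initialItems) are invented for illustration; in a real package the object holding initialize() would be assigned to module.exports.

```javascript
// Sketch of a hypothetical modules/todo/index.js.
// Loading this code creates no state; all setup happens inside initialize().
function TodoListView(items) {
  this.items = items;
}

TodoListView.prototype.render = function() {
  return this.items.map(function(item) { return '* ' + item; }).join('\n');
};

function initialize(options) {
  // options.url and options.config are assumed parameter names.
  var items = (options.config && options.config.initialItems) || [];
  return {
    url: options.url,
    view: new TodoListView(items)
  };
}

// In a real package: module.exports = { initialize: initialize };
var todoPackage = { initialize: initialize };

// Usage, e.g. from a top-level bootstrap script:
var app = todoPackage.initialize({
  url: '/todo',
  config: { initialItems: ['buy milk', 'write book'] }
});
console.log(app.view.render());
```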

Using the glue build system

So, now we have a somewhat detailed spec for how we'd like to build. Node has native support for require(), but what about the browser? We probably need an elaborate library for this?

Nope. This isn't hard: the build system itself is about a hundred fifty lines of code plus another ninety or so for the require() implementation. When I say build, I mean something that is super-lightweight: wrapping code into closures, and providing a local, in-browser require() implementation. I'm not going to put the code here since it adds little to the discussion, but have a look.

I've used onejs and browserbuild before. I wanted something a bit more scriptable, so (after contributing some code to those projects) I wrote gluejs, which is tailored to the system I described above (mostly by having a more flexible API).

With gluejs, you write your build scripts as small blocks of code. This is nice for hooking your build system into the rest of your tools - for example, by building a package on demand when an HTTP request arrives, or by creating custom build scripts that allow you to include or exclude features (such as debug builds) from code.

Let's start by installing gluejs from npm:

$ npm install gluejs

Now let's build something.

Including files and building a package

Let's start with the basics. You use include(path) to add files. The path can be a single file, or a directory (which is included with all subdirectories). If you want to include a directory but exclude some files, use exclude(regexp) to filter files from the build.

You define the name of the main file using main(name); in the code below, it's "index.js". This is the file that gets exported from the package.

var Glue = require('gluejs');

new Glue()

.include('./todo')

.main('index.js')

.export('Todo')

.render(function (err, txt) {

console.log(txt);

});

Each package exports a single variable, and that variable needs a name. In the example above, it's "Todo" (i.e. the package is assigned to window.Todo).

Finally, we have a render(callback) function. It takes a function(err, txt) as a parameter, and returns the rendered text as the second parameter of that function (the first parameter is used for returning errors, a Node convention). In the example, we just log the text out to console. If you put the code above in a file (and some .js files in "./todo"), you'll get your first package output to your console.

If you prefer rebuilding the file automatically, use .watch() instead of .render(). The callback function you pass to watch() will be called when the files in the build change.

Binding to global functions

We often want to bind a particular name, like require('jquery'), to an external library. You can do this with replace(moduleName, string).

Here is an example call that builds a package in response to an HTTP GET:

var fs = require('fs'),

http = require('http'),

Glue = require('gluejs');

var server = http.createServer();

server.on('request', function(req, res) {

if(req.url == '/minilog.js') {

new Glue()

.include('./todo')

.basepath('./todo')

.replace('jquery', 'window.$')

.replace('core', 'window.Core')

.export('Module')

.render(function (err, txt) {

res.setHeader('content-type', 'application/javascript');

res.end(txt);

});

} else {

console.log('Unknown', req.url);

res.end();

}

}).listen(8080, 'localhost');

To concatenate multiple packages into a single file, use concat([packageA, packageB], function(err, txt)):

var packageA = new Glue().export('Foo').include('./fixtures/lib/foo.js');

var packageB = new Glue().export('Bar').include('./fixtures/lib/bar.js');

Glue.concat([packageA, packageB], function(err, txt) {

fs.writeFile('./build.js', txt, function(err) {

if (err) throw err;

});

});

Note that concatenated packages are just defined in the same file - they do not gain access to the internal modules of each other.

- [1] The modularity illustration was adapted from Rich Hickey's presentation Simple Made Easy

- http://www.infoq.com/presentations/Simple-Made-Easy

- http://blog.markwshead.com/1069/simple-made-easy-rich-hickey/

- http://code.mumak.net/2012/02/simple-made-easy.html

- http://pyvideo.org/video/880/stop-writing-classes

- http://substack.net/posts/b96642

3. Getting to maintainable

In this chapter, I'll look at how the ideas introduced in the previous chapter can be applied to real-world code, both new and legacy. I'll also talk about distributing code by setting up a private npm server.

Let's start with some big-picture principles, then talk about legacy - and finally some special considerations for new code. Today's new code is tomorrow's legacy code, so try to avoid falling into bad habits.

Big picture

Use a build system and a module convention that supports granular privacy. Namespaces suck, because they don't let you hide things that are internal to a package within that package. Build systems that don't support this force you to write bad code.

Independent packages/modules. Keep different parts of an app separate: avoid global names and variables, make each part independently instantiable and testable.

Do not intermingle state and definition. You should be able to load a module without causing any side-effects. Isolate code that creates state (instances / variables) in one place, and keep it tiny.

Small external surface. Keep the publicly accessible API small and clearly indicated, as this allows you to refactor and reach better implementations.

Extracting modules/packages from your architecture

First, do a basic code quality pass and eliminate the bad practices outlined in the previous chapter.

Then, start moving your files towards using the CommonJS pattern: explicit require()s and only a single export per file.

Next, have a look at your architecture. Try to separate that hairball of code into distinct packages:

Models and other reusable code (shared views/visual components) probably belong in a common package. This is the core of your application on which the rest of the application builds. Treat this like a 3rd party library in the sense that it is a separate package that you need to require() in your other modules. Try to keep the common package stateless. Other packages instantiate things based on it, but the common package doesn't have stateful code itself.

Beyond your core/common package, what are the smallest pieces that make sense? There is probably one for each "primary" activity in your application. To speed up loading your app, you want to make each activity a package that can be loaded independently after the common package has loaded (so that the initial loading time of the application does not increase as the number of packages increases). If your setup is complex, you probably want a single mechanism that takes care of calling the right initializer.

Isolate the state initialization/instantiation code in each package by moving it into one place: the index.js for that particular package (or, if there is a lot of setup, in a separate file - but in one place only). "I hate state, and want as little as possible of it in my code". Export a single function initialize() that accepts setup parameters and sets up the whole module. This allows you to load a package without altering the global state. Each package is like a "mini-app": it should hide its details (non-reusable views, behavior and models).

Rethink your inheritance chains. Classes are a terrible substitute for a use-oriented API in most cases. Extending a class requires that you understand and often depend on the implementation details. APIs consisting of simple functions are superior, so if you can, write an API. The API often looks like a state manipulation library (e.g. add an invite, remove an invite etc.); it is instantiated together with the related views, and the views will generally hook into that API.

Stop inheriting views from each other. Inheritance is mostly inappropriate for views. Sure, inherit from your framework, but don't build elaborate hierarchies for views. Views aren't supposed to have a lot of code in the first place; defining view hierarchies is mostly just done out of bad habit. Inheritance has its uses, but those are fewer and further apart than you think.

Almost every view in your app should be instantiable without depending on any other view. You should identify views that you want to reuse, and move those into a global app-specific module. If the views are not intended to be reused, then they should not be exposed outside of the activity. Reusable views should ideally be documented in an interactive catalog, like Twitter's Bootstrap.

Extract persistent services. These are things that are active globally and maintain state across different activities. For example, a real-time backend and a data cache. But also other user state that is expected to persist across activities, like a list of opened items (e.g. if your app implements tabs within the application).

Refactoring an existing module

Given an existing module,

1. Make sure each file defines and exports one thing. If you define an Account and a related Settings object, put those into two different files.

2. Do not directly/implicitly add variables under window.*. Instead, always assign your export to module.exports. This makes it possible for other modules to use your module without the module being globally accessible under a particular name/namespace.

3. Stop referring to other modules through a global name. Use var $ = require('jquery'), for example, to specify that your module depends on jQuery. If your module requires another local module, require it using the path: var User = require('./model/user.js').

4. Delay concrete instantiation as long as possible by extracting module state setup into a single bootstrap file/function. Defining a module should be separate from running the module. This allows small parts of the system to be tested independently since you can now require your module without running it.

For example, where you previously defined a class and then immediately assigned an instance of that class onto a global variable/namespace in the same file, you should move the instantiation to a separate bootstrap file/function.

5. If you have submodules (e.g. chat uses backend_service), do not directly expose them to the layer above. Initializing the submodule should be the task of the layer directly above it (and not two layers above it). Configuration can go from a top level initialize() function to initialize() functions in submodules, but keep the submodules of modules out of reach from higher layers.

6. Try to minimize your external surface area.

7. Write package-local tests. Each package should have unit and integration tests which can be run independently of other packages (other than 3rd party libraries and the common package).

8. Start using npm with semantic versioning for distributing dependencies. Npm makes it easy to distribute and use small modules of Javascript.
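To make step 4 above more concrete, here is a small sketch of keeping definition and instantiation apart. Account reuses the name from step 1; the bootstrap() function and its config fields are assumptions for the example, and in a real project the two halves would live in separate files connected by require().

```javascript
// Definition: loading this code creates no instances and touches no globals.
function Account(name) {
  this.name = name;
  this.settings = {};
}

Account.prototype.set = function(key, value) {
  this.settings[key] = value;
  return this;
};

// Bootstrap: the single place where state is created (hypothetical function).
function bootstrap(config) {
  var account = new Account(config.user);
  account.set('theme', config.theme || 'default');
  return { account: account };
}

// A test can exercise Account without ever calling bootstrap().
var app = bootstrap({ user: 'alice' });
console.log(app.account.name, app.account.settings.theme);
```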

Guidelines for new projects

Start with the package.json file.

Add a single bootstrap function. Loading modules should not have side-effects.

Write tests before functionality.

Hide implementation details. Each module should be isolated into its own scope; modules expose a limited public interface and not their implementation details.

Minimize your exports. Small surface area.

Localize dependencies. Modules that are related to each other should be able to work together, while modules that are not related/far from each other should not be able to access each other.

Tooling: npm

Finally, let's talk about distribution. As your projects grow in scope and in modularity, you'll want to be able to load packages from different repositories easily. npm is an awesome tool for creating and distributing small JS modules. If you haven't used it before, Google for a tutorial or read the docs, or check out Nodejitsu's npm cheatsheet. Creating an npm package is simply a matter of following the CommonJS conventions and adding a bit of metadata via a package.json file. Here is an example package.json:

{ "name": "modulename",

"description": "Foo for bar",

"version": "0.0.1",

"dependencies": {

"underscore": "1.1.x",

"foo": "git+ssh://git@github.com:mixu/foo.git#0.4.1"

}

}

This package can then be installed with all of its dependencies by running npm install. To increment the module version, just run npm version patch (or "minor" or "major").

You can publish your package to npm with one command (but do RTFM before you do so). If you need to keep your code private, you can use git+ssh://user@host:project.git#tag-sha-or-branch to specify dependencies as shown above.

If your packages can be public and reusable by other people, then the public npm registry works. The drawback to using private packages via git is that you don't get the benefits of semantic versioning. You can refer to a particular branch or commit sha, but this is less than ideal. If you update your module, then you need to go and bump up the tag or branch in both the project and in its dependencies. This isn't too bad, but ideally, we'd be able to say:

{

"dependencies": { "foo": "1.x.x" }

}

which will automatically select the latest release within the specified major release version.
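To illustrate what "latest release within a major version" means, here is a simplified sketch. It assumes plain MAJOR.MINOR.PATCH version strings and a hypothetical latestWithinMajor() helper; npm's actual resolver supports far more range syntax, as well as prerelease tags.

```javascript
// Sketch: pick the newest version that shares the given major number.
function parse(version) {
  return version.split('.').map(Number);
}

// Compare two versions numerically, part by part (so 1.2.10 > 1.2.9).
function compare(a, b) {
  var pa = parse(a), pb = parse(b);
  for (var i = 0; i < 3; i++) {
    if (pa[i] !== pb[i]) return pa[i] - pb[i];
  }
  return 0;
}

function latestWithinMajor(versions, major) {
  var candidates = versions.filter(function(v) {
    return parse(v)[0] === major;
  });
  if (candidates.length === 0) return null;
  return candidates.sort(compare)[candidates.length - 1];
}

var published = ['0.9.0', '1.0.0', '1.2.10', '1.2.9', '2.0.1'];
console.log(latestWithinMajor(published, 1));
```

With a git tag or commit sha as the dependency, this selection step has to be done by hand every time the dependency is updated.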

Right now, your best bet is to install a local version of the npm registry if you want to work with semantic version numbers rather than git tags or branches. This involves some CouchDB setup. If you need a read-only cache (which is very useful for speeding up/improving reliability of large simultaneous deploys), have a look at npm_lazy; it uses static files instead of CouchDB for simpler setup. I am working on a private npm server that's easier to set up, but haven't quite gotten it completed due to writing this book. But once it's done, I'll update this section.

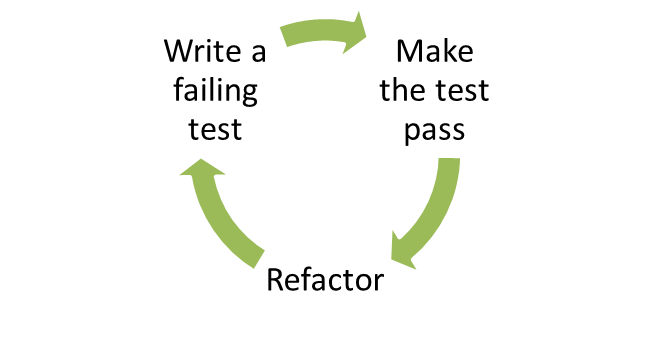

TDD? The best way to make code testable is to start by writing the tests first - TDD style. Essentially, TDD boils down to:

TDD is a set of rules for writing code: you write a failing test (red), then add just enough code to make it pass (green) and finally refactor where necessary (refactor).

In this chapter, we discuss how to set up testing for your project using Mocha, how to do dependency injection for your CommonJS modules, and how you can test asynchronous code. The rest is best covered by some other book or tutorial; so if you haven't heard of TDD, get out from under that rock you've been living under and read Kent Beck's book and perhaps Michael Feathers' book.

Why write tests?

Test driven development is not valuable because it catches errors, but because it changes the way you think about interfaces between modules. Writing tests before you write code influences how you think about the public interface of your modules and their coupling, it provides a safety net for performing refactoring and it documents the expected behavior of the system.

In most cases, you don't completely understand the system when you start writing it. Writing something once produces just a rough draft. You want to be able to improve the code while ensuring that existing code does not break. That's what tests are for: they tell you what expectations you need to fulfill while refactoring.

What to test?

Test driven development implies that tests should guide the development. I often use tests as TODOs when developing new functionality; no code is written until I know what the code should look like in the test. Tests are a contract: this is what this particular module needs to provide externally.

I find that the greatest value comes from testing pure logic and otherwise-hard-to-replicate edge cases. I tend not to test internal details (where you test the actual implementation rather than the public interface). I also avoid testing things that are hard to set up for testing; testing is a tool, not a goal in itself. This is why it is important to have good modularization and few dependencies: the easier your code is to test, the more likely it is that someone will want to write tests for it. For views, I'd test the logic (easy to test/easy to have errors in) and try to make it so that it can be tested separately from any visual properties (hard to test without a human looking at stuff).

Test frameworks

Use any test framework/runner except Jasmine, which is terrible for asynchronous testing due to the amount of boilerplate code it requires.

Test runners basically use one of three different styles for specifying tests:

- BDD: describe(foo) .. before() .. it()

- TDD: suite(foo) .. setup() .. test(bar)

- and exports: exports['suite'] = { before: f() .. 'foo should': f() }

I like TJ's Mocha, which has a lot of awesome features, such as support for all three specification styles, support for running tests in the browser, code coverage, Growl integration, documentation generation, airplane mode and a nyan cat test reporter. I like to use the "exports" style - it is the simplest thing that works.

Some frameworks require you to use their assert() methods; Mocha doesn't. I use Node's built-in assert module for writing my assertions. I'm not a fan of the "assertions-written-out-as-sentences" style; plain asserts are more readable to me since they translate trivially to actual code, and it's not like some non-coder is going to go poke around in your test suite.

Setting up and writing a test

Let's set up a Node project with mocha and write a test. First, let's create a directory, initialize the package.json file (for npm) and install mocha:

[~] mkdir example
[~] cd example
[example] npm init
Package name: (example)
Description: Example system
Package version: (0.0.0)
Project homepage: (none)
Project git repository: (none)
...
[example] npm install --save-dev mocha

I like the exports style for tests:

var assert = require('assert'),

Model = require('../lib/model.js');

exports['can check whether a key is set'] = function(done) {

var model = new Model();

assert.ok(!model.has('foo'));

model.set('foo', 'bar');

assert.ok(model.has('foo'));

done();

};

Note the use of the done() function there. You need to call this function at the end of your test to notify the test runner that the test is done. This makes async testing easy, since you can just make that call at the end of your async calls (rather than having a polling mechanism, like Jasmine does).

You can use before/after and beforeEach/afterEach to specify blocks of code that should be run either before/after the whole set of tests or before/after each test:

exports['given a foo'] = {

before: function(done) {

this.foo = new Foo().connect();

done();

},

after: function(done) {

this.foo.disconnect();

done();

},

'can check whether a key is set': function() {

// ...

}

};

You can also create nested test suites (e.g. where several sub-tests need additional setup):

exports['given a foo'] = {

beforeEach: function(done) {

// ...

},

'when bar is set': {

beforeEach: function(done) {

// ...

},

'can execute baz': function(done) {

// ...

}

}

};

Basic assertions

You can get pretty far with these three:

- assert.ok(value, [message])

- assert.equal(actual, expected, [message])

- assert.deepEqual(actual, expected, [message])

Check out the assert module documentation for more.

Tests should be easy to run

To run the full test suite, I create a Makefile:

TESTS += test/model.test.js

test:
	@./node_modules/.bin/mocha \
		--ui exports \
		--reporter list \
		--slow 2000ms \
		--bail \
		$(TESTS)

.PHONY: test

This way, people can run the tests using "make test". Note that the Makefile requires tabs for indentation.

I also like to make individual test files runnable via node ./path/to/test.js. To do this, I add the following wrapper to detect whether the current module is the main script, and if so, run the tests directly (in this case, using Mocha):

// if this module is the script being run, then run the tests:

if (module == require.main) {

var mocha = require('child_process').spawn('mocha', [ '--colors', '--ui',

'exports', '--reporter', 'spec', __filename ]);

mocha.stdout.pipe(process.stdout);

mocha.stderr.pipe(process.stderr);

}

This makes running tests nice, since you no longer need to remember all those default options.

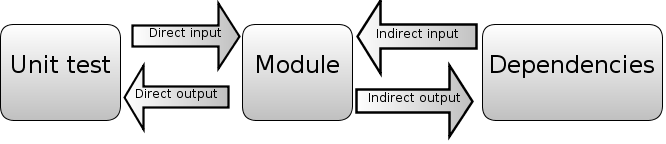

Testing interactions between modules

Unit tests by definition should only test one module at a time. Each unit test exercises one part of the module under test. Some direct inputs (e.g. function parameters) are passed to the module. Once a value is returned, the assertions in the test verify the direct outputs of the module.

However, more complex modules may use other modules: for example, in order to read from a database via function calls (indirect inputs) and write to a database (indirect outputs).

You want to swap the dependency (e.g. the database module) with one that is easier to use for testing purposes. This has several benefits:

- You can capture the indirect outputs (dependency function calls etc.) and control the indirect inputs (e.g. the results returned from the dependency).

- You can simulate error conditions, such as timeouts and connection errors.

- You can avoid having to set up slow or hard-to-configure external dependencies, like databases and external APIs.

This is known as dependency injection. The injected dependency (test double) pretends to implement the dependency, replacing it with one that is easier to control from the test. The code being tested is not aware that it is using a test double.

For simple cases, you can just replace a single function in the dependency with a fake one. For example, you want to stub a function call:

exports['it should be called'] = function(done) {

var called = false,

old = Foo.doIt;

Foo.doIt = function(callback) {

called = true;

callback('hello world');

};

// Assume Bar calls Foo.doIt

Bar.baz(function(result) {

console.log(result);

assert.ok(called);

done();

});

};

For more complex cases, you want to replace the whole backend object.

There are two main alternatives: constructor parameter and module substitution.

Constructor parameters

One way to allow for dependency injection is to pass the dependency as an option. For example:

function Channel(options) {

this.backend = options.backend || require('persistence');

};

Channel.prototype.publish = function(message) {

this.backend.send(message);

};

module.exports = Channel;

When writing a test, you pass a different parameter to the object being tested instead of the real backend:

var MockPersistence = require('mock_persistence'),

Channel = require('./channel');

var c = new Channel({ backend: MockPersistence });

However, this approach is not ideal:

Your code is more cluttered, since you now have to write this.backend.send instead of Persistence.send; you also have to pass in that option even though you only need it for testing.

You have to pass that option through any intermediate objects if you are not directly using this class. If you have a hierarchy where Server instantiates Channel, which uses Persistence, and you want to capture Persistence calls in a test, then the Server will have to accept a channelBackend option or expose the Channel instance externally.

Module substitution

Another way is to write a function that changes the value of the dependency in the module. For example:

var Persistence = require('persistence');

function Channel() { };

Channel.prototype.publish = function(message) {

Persistence.send(message);

};

Channel._setBackend = function(backend) {

Persistence = backend;

};

module.exports = Channel;

Here, the _setBackend function is used to replace the (module-local) private variable Persistence with another (test) object. Since module requires are cached, that private closure and variable can be set for every call to the module, even when the module is required from multiple different files.

When writing a test, we can require() the module to gain access to _setBackend() and inject the dependency:

// using in test

var MockPersistence = require('mock_persistence'),

Channel = require('./channel');

exports['given foo'] = {

before: function(done) {

// inject dependency

Channel._setBackend(MockPersistence);

done();

},

after: function(done) {

// restore the real backend

Channel._setBackend(require('persistence'));

done();

},

// ...

}

var c = new Channel();

Using this pattern you can inject a dependency on a per-module basis as needed.

There are other techniques, including creating a factory class (which makes the common case more complex) and redefining require (e.g. using Node's VM API). But I prefer the techniques above. I actually had a more abstract way of doing this, but it turned out to be totally not worth it; _setBackend() is the simplest thing that works.

Testing asynchronous code

Three ways:

- Write a workflow

- Wait for events, continue when expectations fulfilled

- Record events and assert

Writing a workflow is the simplest case: you have a sequence of operations that need to happen, and in your test you set up callbacks (possibly by replacing some functions with callbacks). At the end of the callback chain, you call done(). You probably also want to add an assertion counter to verify that all the callbacks were triggered.

Here is a basic example of a workflow. Note how each step in the flow takes a callback (e.g. assume we send a message or something):

exports['can read a status'] = function(done) {

var client = this.client;

client.status('item/21').get(function(value) {

assert.deepEqual(value, []);

client.status('item/21').set('bar', function() {

client.status('item/21').get(function(message) {

assert.deepEqual(message.value, [ 'bar' ]);

done();

});

});

});

};

Waiting for events using EventEmitter.when()

In some cases, you don't have a clearly defined order for things to happen. This is often the case when your interface is an EventEmitter. What's an EventEmitter? It's basically just Node's name for an event aggregator; the same functionality is present in many other Javascript projects - for example, jQuery uses .bind()/.trigger() for what is essentially the same thing.

| | Node.js EventEmitter | jQuery |
| --- | --- | --- |
| Attach a callback to an event | .on(event, callback) / .addListener(event, callback) | .bind(eventType, handler) (1.0) / .on(event, callback) (1.7) |
| Trigger an event | .emit(event, data, ...) | .trigger(event, data, ...) |
| Remove a callback | .removeListener(event, callback) | .unbind(event, callback) / .off(event, callback) |
| Add a callback that is triggered once, then removed | .once(event, callback) | .one(event, callback) |

jQuery's functions have some extra sugar on top, like selectors, but the idea is the same. The usual EventEmitter API is a bit awkward to work with when you are testing for events that don't come in a defined sequence:

- If you use EE.once(), you have to manually reattach the handler in case of misses and manually count.

- If you use EE.on(), you have to manually detach at the end of the test, and you need to have more sophisticated counting.

EventEmitter.when() is a tiny extension to the standard EventEmitter API:

EventEmitter.prototype.when = function(event, callback) {

var self = this;

function check() {

if(callback.apply(this, arguments)) {

self.removeListener(event, check);

}

}

check.listener = callback;

self.on(event, check);

return this;

};

EE.when() works almost like EE.once(); it takes an event and a callback. The major difference is that the return value of the callback determines whether the callback is removed.

exports['can subscribe'] = function(done) {

var client = this.client;

this.backend.when('subscribe', function(client, msg) {

var match = (msg.op == 'subscribe' && msg.to == 'foo');

if (match) {

assert.equal('subscribe', msg.op);

assert.equal('foo', msg.to);

done();

}

return match;

});

client.connect();

client.subscribe('foo');

};

Recording events and then asserting

Recording replacements (a.k.a spies and mocks) are used more frequently when it is not feasible to write a full replacement of the dependency, or when it is more convenient to collect output (e.g from operations that might happen in any order) and then assert that certain conditions are fulfilled.

For example, with an EventEmitter, we might not care in what order certain messages were emitted, just that they were emitted. Here is a simple example using an EventEmitter:

exports['doIt sends a b c'] = function(done) {

var received = [];

client.on('foo', function(msg) {

received.push(msg);

});

client.doIt();

assert.ok(received.some(function(result) { return result == 'a'; }));

assert.ok(received.some(function(result) { return result == 'b'; }));

assert.ok(received.some(function(result) { return result == 'c'; }));

done();

};

With the DOM or some other hard-to-mock dependency, we just substitute the function we're calling with another one (possibly via the dependency injection techniques mentioned earlier).

exports['doIt sends a b c'] = function(done) {

var received = [],

old = jQuery.foo;

jQuery.foo = function() {

received.push(arguments);

old.apply(this, arguments);

};

jQuery.doIt();

assert.ok(received.some(function(result) { return result[1] == 'a'; }));

assert.ok(received.some(function(result) { return result[1] == 'b'; }));

done();

};

Here, we are just replacing a function, capturing calls to it, and then calling the original function. Check out MDN on what arguments is, if you're not familiar with it.

In this chapter, I will look at the concepts and differences of opinion between various frameworks when implementing views. I actually started writing this chapter with a code comparison (based on TodoMVC), but decided to remove it - the code you write is mostly very similar, while the underlying mechanisms and abstractions used are different.

The view layer is the most complex part of modern single page app frameworks. After all, this is the whole point of single page apps: make it easy to have awesomely rich and interactive views. As you will see, there are two general approaches to implementing the view layer: one is based around code, and the other is based around markup and having a fairly intricate templating system. These lead to different architectural choices.

Views have several tasks to take care of:

- Rendering a template. We need a way to take data, and map it / output it as HTML.

- Updating views in response to change events. When model data changes, we need to update the related view(s) to reflect the new data.

- Binding behavior to HTML via event handlers. When the user interacts with the view HTML, we need a way to trigger behavior (code).
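To make the three tasks concrete without committing to any framework, here is a deliberately simplified sketch that runs in Node without a DOM. Model, TodoView and the events map are hypothetical; a real view layer would attach the handlers to actual elements and would escape the data it writes into HTML.

```javascript
var EventEmitter = require('events').EventEmitter;

// Hypothetical model: emits 'change' whenever a value is set.
function Model(attrs) {
  EventEmitter.call(this);
  this.attrs = attrs;
}
Model.prototype = Object.create(EventEmitter.prototype);
Model.prototype.set = function(key, value) {
  this.attrs[key] = value;
  this.emit('change', key, value);
};

function TodoView(model) {
  var self = this;
  this.model = model;
  this.html = '';
  // Task 2: update the view in response to change events.
  model.on('change', function() { self.render(); });
  // Task 3: a selector-to-handler map; a real view layer would bind these to the DOM.
  this.events = {
    'click .toggle': function() { model.set('done', !model.attrs.done); }
  };
  this.render();
}

// Task 1: render a template by mapping data to HTML.
TodoView.prototype.render = function() {
  var a = this.model.attrs;
  this.html = '<li class="' + (a.done ? 'done' : 'open') + '">' + a.title + '</li>';
  return this.html;
};

var view = new TodoView(new Model({ title: 'Write tests', done: false }));
view.events['click .toggle'](); // simulate the user clicking the toggle
console.log(view.html);
```

This sketch re-renders the whole view on every change, which is only one of the possible update granularities.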

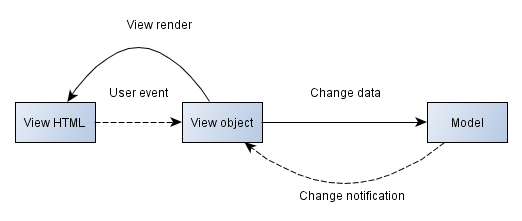

The view layer implementation is expected to provide a standard mechanism or convention to perform these tasks. The diagram below shows how a view might interact with models and HTML while performing these tasks:

There are two questions:

- How should event handlers be bound to/unbound from HTML?

- At what granularity should data updates be performed?

Given the answers to those questions, you can determine how complex your view layer implementation needs to be, and what the output of your templating system should be.

One answer would be to say that event handlers are bound using DOM selectors and data updates are "view-granular" (see below). That gives you something like Backbone.js. There are other answers.

In this chapter, I will present a kind of typology for looking at the choices that people have made in writing a view layer. The dimensions/contrasts I look at are:

- Low end interactivity vs. high end interactivity

- Close to server vs. close to client

- Markup-driven views vs. Model-backed views

- View-granular vs. element-granular vs. string-granular updates

- CSS-based vs. framework-generated event bindings

Low-end interactivity vs high-end interactivity

What is your use case? What are you designing your view layer for? I think there are two rough use cases for which you can cater:

- Low-end interactivity

- High-end interactivity

Both Github and Gmail are modern web apps, but they have different needs. Github's pages are largely form-driven, with disconnected pieces of data (like a list of branches) that cause a full page refresh; Gmail's actions cause more complex changes: adding and starring a new draft message applies the change in multiple places without a page refresh.

Which type of app are you building? Your mileage with different app architectures/frameworks will vary based on what you're trying to achieve.

The way I see it, web apps are a mixture of various kinds of views. Some of those views involve more complicated interactions and benefit from the architecture designed for high-end interactivity. Other views are just simple components that add a bit of interactivity. Those views may be easier and cleaner to implement using less sophisticated methods.

If you never update a piece of data, and it can reasonably fit in the DOM without impacting performance, then it may be a good candidate for a low-end approach. For example, collapsible sections and dropdown buttons that never change content but have some basic enhancements might work better as just markup without actually being bound to model data and/or without having an explicit view object.

On the other hand, things that get updated by the user will probably be best implemented using high-end, model-and-view backed objects. It is worth considering what makes sense in your particular use case and how far you can get with low-end elements that don't contain "core data". One way to do this is to maintain a catalogue of low-end views/elements for your application, a la Twitter's Bootstrap. These low-end views are distinguished by the fact that they are not bound to / connected to any model data: they just implement simple behavior on top of HTML.

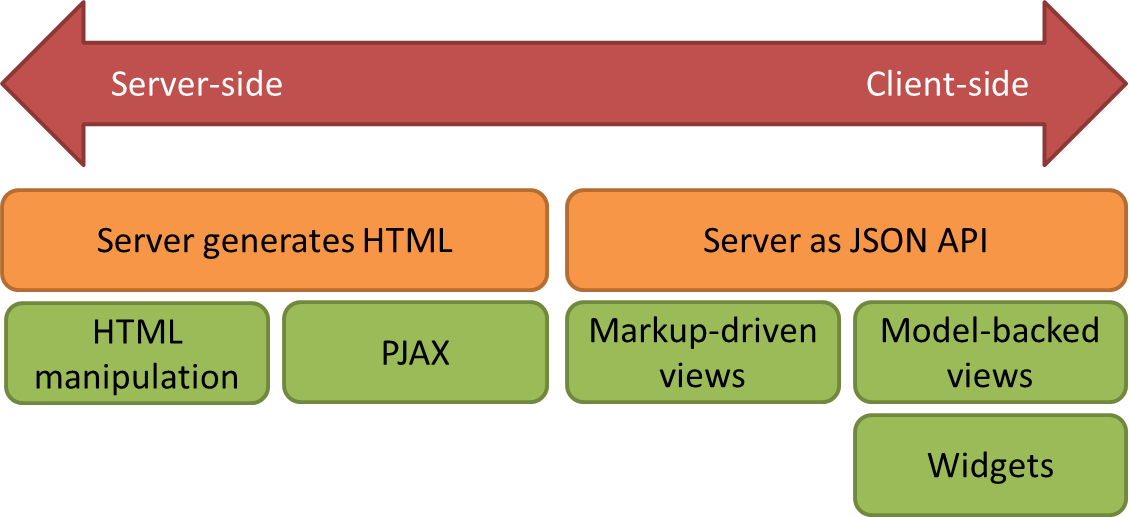

Close to server vs. close to client

Do you want to be closer to the server, or closer to the user? UIs generated on the server side are closer to the database, which makes database access easier/lower latency. UIs that are rendered on the client side put you closer to the user, making responsive/complex UIs easier to develop.

Data in markup/HTML manipulation. Data is stored in HTML; you serve up a bunch of scripts that use the DOM or jQuery to manipulate the HTML to provide a richer experience. For example, you have a list of items that is rendered as HTML, but you use a small script that takes that HTML and allows the end user to filter the list. The data is usually read/written from the DOM. Examples: Twitter's Bootstrap, jQuery plugins.
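The list-filtering example can be sketched as follows. This is a minimal illustration, assuming the items have already been rendered as elements by the server; the function name `filterList` is hypothetical. Note that the data is read straight from the DOM (`textContent`) rather than from a model:

```javascript
// Hide every item whose text does not contain the query (case-insensitive).
// `items` is any array-like of elements with `textContent` and `hidden`.
// Returns the number of items left visible.
function filterList(items, query) {
  const needle = query.trim().toLowerCase();
  let visible = 0;
  Array.from(items).forEach((item) => {
    const match = item.textContent.toLowerCase().includes(needle);
    item.hidden = !match;
    if (match) visible += 1;
  });
  return visible;
}
```

In a page, this could be wired to a text input with something like `input.addEventListener('input', function () { filterList(list.children, input.value); })`, where `input` and `list` are elements you have already looked up.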

Specific HTML+CSS markup structures are used to make small parts of the document dynamic. You don't need to write Javascript or only need to write minimal Javascript to configure options. Have a look at Twitter's Bootstrap for a modern example.

This approach works for implementing low-end interactivity, where the same data is never shown twice and where each action triggers a page reload. You can spot this approach by looking for a backend that responds with fully rendered HTML and/or a blob of Javascript which checks for the presence of particular CSS classes and conditionally activates itself (e.g. via event handlers on the root element or via $().live()).

PJAX. You have a page that is generated as HTML. Some user action triggers code that replaces parts of the existing page with new server-generated HTML that you fetch via AJAX. You use pushState from the HTML5 history API to give the appearance of a page change. It's basically "HTML manipulation - Extreme Edition", and comes with the same basic limitations as pure HTML manipulation.
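A rough sketch of the PJAX flow, assuming a browser environment with `fetch` and the history API. The function name `pjaxNavigate`, the `env` parameter (which exists only so the browser globals can be swapped out) and the idea that the server returns just the fragment for the container are assumptions made for illustration:

```javascript
// Fetch server-rendered HTML for `url`, swap it into `container`,
// and record the new URL in the session history.
// `env` is optional; pass { fetch, history } stubs to run outside a browser.
function pjaxNavigate(url, container, env) {
  const fetchFn = env ? env.fetch : (u) => fetch(u);
  const historyObj = env ? env.history : history;
  return fetchFn(url)
    .then((response) => {
      if (!response.ok) throw new Error('Request failed: ' + response.status);
      return response.text();
    })
    .then((html) => {
      container.innerHTML = html;
      historyObj.pushState({ url: url }, '', url);
      return html;
    });
}
```

A complete version would also listen for the `popstate` event to restore content when the user goes back or forward; that part is left out here.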

Widgets. The generated page is mostly a loader for Javascript. You instantiate widgets/rich controls that are written in JS and provided by your particular framework. These components can fetch more data from the server via a JSON API. Rendering happens on the client-side, but within the customization limitations of each widget. You mostly work with the widgets, not HTML or CSS. Examples: YUI2, Sproutcore.

Finally, we have markup-driven views and model-backed views.

Markup-driven views vs Model-backed views

If you could choose your ideal case: what should people read in order to understand your application? The markup - or the code?

Frameworks fall into two different camps based on this distinction: the ones where things are done mostly in markup, and ones in which things are mostly done in code.

[ Data in JS models ]     [ Data in JS models ]
[ Model-backed views ]    [ Markup accesses models ]

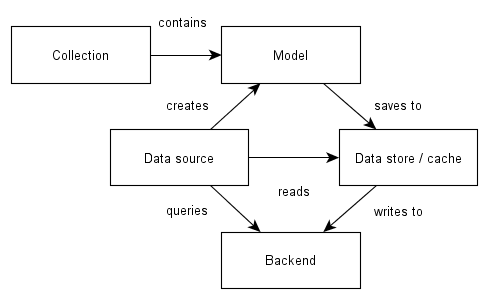

Model-backed views. In this approach, models are the starting point: you instantiate models, which are then bound to/passed to views. The view instances then attach themselves into the DOM, and render their content by passing the model data into a template. To illustrate with code:

    var model = new Todo({ title: 'foo', done: false }),
        view = new TodoView(model);

The idea is that you have models which are bound to views in code.

Markup-driven views. In this approach, we still have views and models, but their relationship is inverted. Views are mostly declared by writing markup (with things like custom attributes and/or custom tags). In markup, this might look like this:

    {{view TodoView}}
      {{=window.model.title}}
    {{/view}}

The idea is that you have a templating system that generates views, and that views access variables directly through a framework-provided mechanism.

In simple cases, there might not even be a directly accessible instance of a view. Instead, views refer to variables in the global scope by their name: "App.Foo.bar" might resolve to a particular model. Views might also refer to controllers or observable variables/models by their name.
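To make the name-based lookup concrete, here is a minimal sketch of how a templating system might resolve a dotted name such as "App.Foo.bar" against a root object. The functions `resolvePath` and `renderTemplate`, and the reuse of the `{{=...}}` syntax from the example above, are illustrative assumptions rather than any framework's actual API:

```javascript
// Walk a dotted path ("App.Foo.bar") from a root object.
// Returns undefined if any segment along the way is missing.
function resolvePath(root, path) {
  return path.split('.').reduce((obj, key) => {
    return obj == null ? undefined : obj[key];
  }, root);
}

// Replace each {{=some.path}} placeholder with the value found at that path.
// Missing values render as an empty string. No HTML escaping is done here.
function renderTemplate(template, root) {
  return template.replace(/\{\{=\s*([\w$.]+)\s*\}\}/g, (match, path) => {
    const value = resolvePath(root, path);
    return value == null ? '' : String(value);
  });
}

// Usage: the root object stands in for the global scope.
const globals = { App: { Foo: { bar: 'Buy milk' } } };
const html = renderTemplate('<li>{{=App.Foo.bar}}</li>', globals);
// html === '<li>Buy milk</li>'
```

A real markup-driven framework would do much more than string substitution (it has to keep the output in sync with the data), but the lookup by name is the part that ties the markup to the models.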

Two tracks

These two approaches aren't just minor differences, they represent different philosophies and have vastly different complexities in terms of their implementation.

There are two general approaches to the view layer in modern single page apps, and they start from a difference of opinion about what is primary: markup or code.

If markup is primary, then one needs to start with a fairly intricate templating system that is capable of generating the metadata necessary to implement the functionality. You still need to translate the templating language into view objects in the background in order to display views and make sure that data is updated. This hides some of the work from the user at the cost of added complexity.

If code is primary, then we accept a bit more verbosity in exchange for a simpler overall implementation. The difference between these two can easily be at least an order of magnitude in terms of the size of the framework code.

View behavior: in view object vs. in controller?

In the model-backed views approach, you tend to think of views as reusable components. Traditional (MVC) wisdom suggests "skinny controller, fat model" - i.e. put business logic in the model, not in the controller. I'd go even further, and try to get rid of controllers completely - replacing them with view code and initializers (which set up the interactions between the parts).

But isn't writing code in the view bad? No - views aren't just a generated string of HTML (that's the template). In single page apps, views have longer lifecycles and really, the initialization is just the first step in interacting with the user. A generic component that has both presentation and behavior is nicer than one that only works in a specific environment / specific global state. You can then instantiate that component with your specific data from whatever code you use to initialize your state.

In the markup-driven views approach, ideally, there would be no view objects whatsoever. The goal is to have a sufficiently rich templating system that you do not need to have a view object that you instantiate or bind a model to. Instead, views are "thin bindings" with the ability to directly access variables using their names in the global scope; you can write markup-based directives to directly read in those variables and iterate over them. When you need logic, it is mostly for special cases, and that's where you add a controller. The ideal is that views aren't backed by objects, but by the view system/templating metadata (transformed into the appropriate set of bindings).

Controllers are a result of non-reusable views. If views are just slightly more sophisticated versions of "strings of HTML" (that bind to specific data) rather than objects that represent components, then it is more tempting to put the glue for those bindings in a separate object, the controller. This also has a nice familiar feeling to it from server-side frameworks (request-response frameworks). If you think of views as components that are reusable and consist of a template and an object, then you will more likely want to put behavior in the view object since it represents a singular, reusable thing.

Again, I don't like the word "controller". Occasionally, the distinction is made between "controllers specific to a view" and "controllers responsible for coordinating a particular application state". I'd find "view behavior" and "initialization code" to be more descriptive. I would much rather put the "controller code" specific to a view into the view object, and make the view generic enough to be reusable through configuration and events.
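As a sketch of what "view behavior plus initialization code" might look like in place of a controller, the hypothetical `SelectableList` view below owns its own behavior and is made reusable through configuration (`items`, `onSelect`). The initialization code at the bottom is the only part that knows about application state. All names here are invented for illustration:

```javascript
// A generic component: presentation and behavior live together,
// and nothing in it depends on global state.
class SelectableList {
  constructor(options) {
    this.items = options.items.slice();
    this.onSelect = options.onSelect || function () {};
    this.selectedIndex = -1;
  }
  // View behavior: update own state, then notify whoever configured the view.
  select(index) {
    if (index < 0 || index >= this.items.length) return;
    this.selectedIndex = index;
    this.onSelect(this.items[index], index);
  }
  // Presentation: produce the HTML for the current state.
  // Items are assumed to be trusted strings, so no escaping is done.
  render() {
    const rows = this.items.map((item, i) => {
      const cls = i === this.selectedIndex ? ' class="selected"' : '';
      return '<li' + cls + '>' + item + '</li>';
    });
    return '<ul>' + rows.join('') + '</ul>';
  }
}

// Initialization code: wires a view instance to application-specific state.
const appState = { currentBranch: null };
const branchList = new SelectableList({
  items: ['master', 'develop'],
  onSelect: (name) => { appState.currentBranch = name; },
});
branchList.select(1);
```

The same `SelectableList` could be instantiated elsewhere with different items and a different `onSelect`, which is the sense in which the view is reusable while the glue stays in the initializer.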

Observables vs. event emitters

Once we have some view behavior, we will want to trigger it when model data changes. The two major options are observables and event emitters.

What's the difference? Basically, in terms of implementation, not much. In both cases, when a change occurs, the code that is interested in that change is triggered. The difference is mostly syntax and implied design patterns. Events are registered on objects:

    Todos.on('change', function() { ... });

while observers are attached through global names:

    Framework.registerObserver(window.App.Todos, 'change', function() { ... });

Usually, observable systems also add a global name resolution system, so the syntax becomes:

    Framework.observe('App.Todos', function() { ... });

Or if you want to be an asshole, you can avoid typing Framework. by extending the native Function object:

    (function() { ... }).observe('App.Todos');

The markup-driven approach tends to lead to observables. Observables often come with a name resolution system, where you refer to things indirectly via strings. A global name resolution system, where names are strings rather than direct object references, is often added for observables because setting up observers without it becomes complex: the observers can only be registered once the objects they refer to have been instantiated. Since there are no guarantees whether a distant object is initialized, there needs to be a level of abstraction where things only get bound once the object is instantiated.
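The deferred binding described above can be sketched as a small registry. This is an illustrative assumption about how such a system could work, not the implementation of any particular framework; `Registry`, `define` and `observe` are hypothetical names, and the emitter is reduced to the bare minimum:

```javascript
// Bare-bones event emitter, standing in for a model or collection.
class Emitter {
  constructor() { this.handlers = new Map(); }
  on(eventName, fn) {
    const list = this.handlers.get(eventName) || [];
    list.push(fn);
    this.handlers.set(eventName, list);
  }
  emit(eventName, payload) {
    (this.handlers.get(eventName) || []).forEach((fn) => fn(payload));
  }
}

// Name resolution layer: observers refer to objects by string name,
// so they can be registered before the object exists.
class Registry {
  constructor() {
    this.objects = new Map(); // name -> emitter
    this.pending = new Map(); // name -> [{ eventName, fn }] waiting for define()
  }
  observe(name, eventName, fn) {
    const target = this.objects.get(name);
    if (target) {
      target.on(eventName, fn);
    } else {
      const list = this.pending.get(name) || [];
      list.push({ eventName: eventName, fn: fn });
      this.pending.set(name, list);
    }
  }
  define(name, emitter) {
    this.objects.set(name, emitter);
    // Bind every observer that was waiting for this name.
    (this.pending.get(name) || []).forEach((p) => emitter.on(p.eventName, p.fn));
    this.pending.delete(name);
  }
}

const registry = new Registry();
const seen = [];
registry.observe('App.Todos', 'change', (todo) => seen.push(todo)); // not defined yet
const todos = new Emitter();
registry.define('App.Todos', todos); // the pending observer is attached here
todos.emit('change', 'buy milk');    // seen is now ['buy milk']
```

With plain event emitters, the `observe` call would have to wait until `todos` exists; the string name is what lets the two be set up in either order.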

The main reason why I don't particularly like observables is that you need to refer to objects via a globally accessible name. Observables themselves are basically equivalent to event emitters, but they imply that things ought to be referred to by global names, since without a global name resolution system there would be no meaningful difference between event listeners and observables with observers.

Observables also tend to encourage larger models since model properties are/can be observed directly from views - so it becomes convenient to add more model properties, even if those are specific to a single view. This makes shared models more complex everywhere just to accommodate a particular view, when those properties might more properly be put in a package/module-specific place.

Specifying bindings using DOM vs. having framework-generated element IDs

We will also want to bind events from the DOM to our views. Since the DOM only has an element-based API for attaching events, there are only two choices:

- DOM-based event bindings.

- Framework-generated event bindings.

DOM-based event bindings basically rely on DOM properties, like the element ID or element class to locate the element and bind events to it. This is fairly similar to the old-fashioned $('#foo').on('click', ...) approach, except done in a standardized way as part of view instantiation.
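A minimal sketch of selector-based binding done as part of view instantiation, similar in spirit to the events hash in Backbone.js. The `bindEvents` helper and the exact format of the `events` map are assumptions for illustration; the only DOM APIs relied on are `addEventListener`, `Element.closest` and `Node.contains`:

```javascript
// Bind handlers described as { 'eventName selector': 'methodName' }.
// One listener per entry is attached to the view's root element, and the
// selector is checked against the event target when the event fires.
function bindEvents(view, rootEl, events) {
  Object.keys(events).forEach((spec) => {
    const space = spec.indexOf(' ');
    const eventName = space === -1 ? spec : spec.slice(0, space);
    const selector = space === -1 ? null : spec.slice(space + 1).trim();
    const method = view[events[spec]];
    rootEl.addEventListener(eventName, (event) => {
      // No selector means "any event of this type inside the root".
      const matched = selector ? event.target.closest(selector) : rootEl;
      // Ignore matches that lie outside this view's root element.
      if (matched && rootEl.contains(matched)) method.call(view, event, matched);
    });
  });
}
```

For example, `bindEvents(view, rootEl, { 'click .remove': 'onRemove' })` would call `view.onRemove` whenever a click lands on (or inside) an element with the class `remove` within `rootEl`. Because the listener sits on the root, the binding keeps working even if the view re-renders its inner HTML.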