Comments:"James Somers – Heroku's Ugly Secret | Rap Genius"

URL:http://rapgenius.com/James-somers-herokus-ugly-secret-lyrics

The story of how the cloud-king turned its back on Rails and swindled its customers

A Rails dyno isn't what it used to be. In mid-2010, Heroku quietly redesigned its routing system, and the change — nowhere documented, nowhere instrumented — radically degraded throughput on the platform. Dollar for dollar a dyno became worth a fraction of its former self.

Rap Genius is blowing up. Traffic is growing, the rate of growth is growing, superstar artists are signing up for their own accounts, smart people have bet on us to the tune of $15M, and day by day we are accumulating more of the prize itself, irrepressible meme value.

Of course for the tech side of the house, this means we’re finally running into those problems that everyone says they want to run into. We are finally hitting that point where our optimizations aren’t premature. With nearly 15 million monthly uniques we are, as they say, “at scale.”

If you had asked us a couple of weeks ago, we would have told you that we were happy to be one of Heroku’s largest customers, happy even to be paying their eye-popping monthly bill (~$20,000). “As devs,” we would have said, “we don’t want to manage infrastructure, we want to build features. If Heroku lets us do that, they’ve earned their keep.”

“Requests are waiting in a queue at the dyno level,” a Heroku engineer told us, “then being served quickly (thus the Rails logs appear fast), but the overall time is slower because of the wait in the queue.”

Waiting in a queue at the dyno level? What?

How you probably think Heroku works

To understand how weird this response was, you first must understand how everyone thinks Heroku works.

When someone requests a page from your site, that request first goes through Heroku’s router (they call it the “routing mesh”), which decides which dyno should work on it. The ostensible purpose of the router is to balance load intelligently between dynos, so that a single dyno doesn’t end up working non-stop while the others do nothing. If at any given moment all the dynos are busy, the router should queue the request and give it to the first one that becomes available.

And indeed this is what Heroku claims on their "How it Works" page:

Their documentation tells a similar story. Here's a page from 2009:

Same for New Relic: When it reports “Request Queuing,” it’s talking about time spent at the router. For Rap Genius, on a bad day, that amounts to a tiny imperceptible tax of about 10ms per request.

"Queuing at the Dyno Level"

This is why the Heroku engineer's comment about requests “waiting in a queue at the dyno level” struck us as so bizarre: we were under the impression that this could never happen. The whole point of "intelligent load distribution as you scale" is that you shouldn't send requests to dynos unless they're free! And even if all the dynos are full, it's better for the router to hold on to requests until one frees up (rather than risk stacking them behind slow requests).

If you're lucky enough to find the correct doc (a doc that contradicts all the others, and the logs, and the marketing material), you'll find that Heroku replaced its "intelligent load distribution," once a cornerstone of its platform, with "random load distribution":

The routing mesh uses a random selection algorithm for HTTP request load balancing across web processes.

That’s important enough to repeat:

In mid-2010, Heroku redesigned its routing mesh so that new requests would be routed, not to the first available dyno, but randomly, regardless of whether a request was in progress at the destination.

So what?

Why does this matter? Because routing requests randomly is dumb!

It would be like if those machines at the Whole Foods checkout line didn’t send you to the first available register, but to a random register where other customers were already standing in line. How much longer would it take to get out of the store? How much more time would the checkout clerks spend idling? If you owned that store and one day the manager, without telling you, replaced your fancy checkout routing system with a pair of dice, and his nightly reports to you never changed (he never told you how long people were waiting at individual registers, or that they even could, when preventing that was the whole point of having a routing system), that would be bad, right?

In the old regime, which Heroku called “intelligent routing,” a dyno was a dyno was a dyno. When you bought one, you bought a predictable increase in concurrency (the capacity for simultaneous requests). In fact Heroku defines concurrency as "exactly equal to the number of dynos you have running for your app."

But that's no longer true, because the routing system is no longer intelligent. When you route requests randomly — we’ll call this the “naive” approach — concurrency can be significantly less than the number of dynos. That’s because unused dynos only have some probability of seeing a request, and that probability decreases as the number of dynos grows. It’s no longer possible to reliably “soak up” excess load with fresh dynos, because you have no guarantee that requests will find them.
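To get a feel for how much concurrency goes missing, here is a back-of-the-envelope sketch in Python. It is our illustration, not part of the simulation described later, and it assumes a simplified scenario: a burst of requests lands on a pool of idle dynos all at once, and each request is routed uniformly at random. The function name `expected_busy_dynos` is made up for this example.

```python
def expected_busy_dynos(num_dynos: int, num_requests: int) -> float:
    """Expected number of distinct dynos that receive at least one request
    when each request independently picks a dyno uniformly at random
    (the classic balls-in-bins calculation)."""
    # Probability that one particular dyno is missed by every request.
    missed_by_all = (1.0 - 1.0 / num_dynos) ** num_requests
    return num_dynos * (1.0 - missed_by_all)


if __name__ == "__main__":
    for n in (10, 75, 1000):
        busy = expected_busy_dynos(n, n)
        print(f"{n} dynos, {n} simultaneous requests: "
              f"about {busy:.1f} dynos busy, {n - busy:.1f} sitting idle")
```

Under these assumptions, sending as many simultaneous requests as you have dynos puts a bit under two thirds of the dynos to work. The rest sit idle while requests stack up behind each other elsewhere.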

Clearly, under Heroku's random routing approach you need more dynos to achieve the same throughput you had when they routed requests intelligently. But how many more dynos do you need? If your app needed 10 dynos under the old regime, how many does it need under the new regime? 20? If so, Heroku is overcharging you by a factor of 2, which you might playfully refer to as the Heroku Swindle Factor™.

Actually, for big apps, it's about FIFTY. That's right: if your app needs 80 dynos with an intelligent router, it needs 4,000 with a random router. So if you're on Rails (or any other single-threaded, one-request-at-a-time framework), Heroku is overcharging you by a factor of 50.

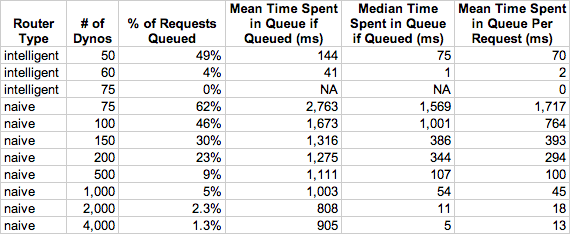

This we discovered by simulating (in R — here's our annotated source) both routing regimes on a model of the Rap Genius application with these properties:

- 9,000 requests per minute (arriving as in a Poisson process)

- Mean request time: 306ms

- Median request time: 46ms

- Request times following this distribution (from a sample of 212k actual Rap Genius requests; use a Weibull distribution to approximate this at home):

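| Percentile | 1% | 5% | 10% | 25% | 50% | 75% | 90% | 99% | 99.9% |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Request time | 7ms | 8ms | 13ms | 23ms | 46ms | 255ms | 923ms | 3144ms | 7962ms |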
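If you want to try this at home without R, the model above can be sketched in a few dozen lines of Python. This is not the annotated R source, just a rough re-creation under stated assumptions: the Weibull parameters are fit to match only the mean (306ms) and median (46ms), so the tail will not line up exactly with the percentile table, and the names `fit_weibull` and `simulate` are made up for this example. Expect numbers in the same ballpark as ours, not identical ones.

```python
import heapq
import math
import random

ARRIVALS_PER_SEC = 9000 / 60      # 9,000 requests per minute
MEAN_S, MEDIAN_S = 0.306, 0.046   # request times from the list above, in seconds


def fit_weibull(mean, median):
    """Find Weibull (scale, shape) matching a mean and a median by bisection."""
    def ratio(shape):
        # mean / median for this shape; it falls as shape rises on (0.2, 2.0)
        return math.gamma(1 + 1 / shape) / math.log(2) ** (1 / shape)

    lo, hi = 0.2, 2.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if ratio(mid) > mean / median:
            lo = mid   # tail too heavy: need a larger shape
        else:
            hi = mid
    shape = (lo + hi) / 2
    return mean / math.gamma(1 + 1 / shape), shape


def simulate(num_dynos, num_requests, routing, seed=0):
    """Return (fraction of requests queued, mean wait of queued requests)."""
    rng = random.Random(seed)
    scale, shape = fit_weibull(MEAN_S, MEDIAN_S)
    # Time at which each dyno next becomes idle. In "intelligent" mode this
    # list is kept as a min-heap; in "random" mode it is indexed by dyno.
    free_at = [0.0] * num_dynos
    now, queued, total_wait = 0.0, 0, 0.0
    for _ in range(num_requests):
        now += rng.expovariate(ARRIVALS_PER_SEC)     # Poisson arrivals
        service = rng.weibullvariate(scale, shape)   # (scale, shape) order
        if routing == "random":
            dyno = rng.randrange(num_dynos)          # pick a dyno blindly
            start = max(now, free_at[dyno])
            free_at[dyno] = start + service
        else:
            # "intelligent": one shared queue, first available dyno wins
            start = max(now, free_at[0])
            heapq.heapreplace(free_at, start + service)
        if start > now:
            queued += 1
            total_wait += start - now
    return queued / num_requests, (total_wait / queued if queued else 0.0)


if __name__ == "__main__":
    for routing in ("intelligent", "random"):
        rate, wait = simulate(75, 100_000, routing)
        print(f"{routing:12s} queued {rate:6.1%}, "
              f"mean wait when queued {wait:.3f}s")
```

The only difference between the two regimes is the branch inside the loop. Random routing commits a request to a dyno before knowing whether it is busy, while the intelligent router holds the request until the earliest dyno frees up.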

Here are our final aggregated results:

If Heroku were using intelligent routing, an app with 75 dynos that receives 9,000 requests per minute will never have to queue a request. But with a naive (random) router, that same app — with the same number of dynos, the same rate of incoming requests, the same distribution of response times — will now see a 62% queue rate, with a mean queue time of 2.763 seconds. On average each request will spend almost 6x longer in queue than in the app.
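The arithmetic behind those figures is easy to check. The sketch below assumes the 2.763-second mean is taken over queued requests only, which is how we read the aggregated results. All variable names are ours.

```python
requests_per_sec = 9000 / 60       # 150 requests per second
mean_request_s = 0.306             # mean time spent in the app
dynos = 75

# Average number of requests being worked on at any instant.
offered_load = requests_per_sec * mean_request_s
utilization = offered_load / dynos

queue_rate = 0.62                  # share of requests that queue (random routing)
mean_wait_when_queued_s = 2.763    # assumed: averaged over queued requests only

# Spread the queueing time over every request, queued or not.
mean_wait_all_s = queue_rate * mean_wait_when_queued_s
queue_to_app_ratio = mean_wait_all_s / mean_request_s

print(f"offered load: {offered_load:.1f} dynos' worth of work")
print(f"utilization with {dynos} dynos: {utilization:.0%}")
print(f"mean queue time per request: {mean_wait_all_s:.2f}s, "
      f"{queue_to_app_ratio:.1f}x the time in the app")
```

So the app only ever has about 46 dynos' worth of work in flight, which is why 75 dynos leave comfortable headroom under intelligent routing. Averaged over all requests, the queue time comes to roughly 1.7 seconds, a little under six times the 306ms spent in the app.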

And since each additional dyno adds less and less to your app's concurrency (since it's less and less likely to get used), you have to add a lot of dynos to get the queue rate down. In fact to cut your percentage of queued requests by half, you have to double your allotment of dynos. And even as you do that, the average amount of time that queued requests spend in the queue (column 4) stubbornly holds above 1s.
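One way to see why halving the queue rate takes double the dynos: under random routing each dyno behaves roughly like its own single-server queue with Poisson arrivals, and for such a queue the chance that an arriving request finds the server busy equals the server's utilization. The sketch below rests on that textbook approximation, not on our simulation output, and `approx_queue_rate` is a made-up helper name.

```python
def approx_queue_rate(num_dynos, requests_per_sec=150.0, mean_request_s=0.306):
    """Approximate share of requests that land on a busy dyno under random
    routing: per-dyno utilization, capped at 100%."""
    return min(1.0, requests_per_sec * mean_request_s / num_dynos)


if __name__ == "__main__":
    for n in (75, 150, 300, 600, 4000):
        print(f"{n:5d} dynos: about {approx_queue_rate(n):.1%} of requests queued")
```

Because utilization is inversely proportional to the number of dynos, each doubling only halves the estimated queue rate. At 75 dynos the estimate is about 61%, close to the 62% our simulation reports.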

But of course you can’t actually crank your app to 4,000 dynos. For one thing it’d cost over $300k per month. For another, Postgres can’t handle that many simultaneous connections.

In fact a routing layer designed for non-blocking, evented, realtime app servers like Node and its ilk (a routing layer that assumes every dyno in a pool is as capable of serving a request as any other) is about as bad as it gets for Rails, where almost the opposite is true: the available dynos are perfectly snappy and the others, until they become available, are useless. The unfortunate conclusion being that Heroku is not appropriate for any Rails app that’s more than a toy.

(If you enjoyed this article, remember: Rap Genius is hiring!)